People data is the information that organizations collect and analyze about their employees, customers, and other stakeholders. It can include demographic, behavioral, performance, and feedback data from various sources, such as human resources information systems (HRIS), applicant tracking systems (ATS), talent management systems, learning management systems (LMS), customer relationship management (CRM) systems, and enterprise resource planning (ERP) systems.

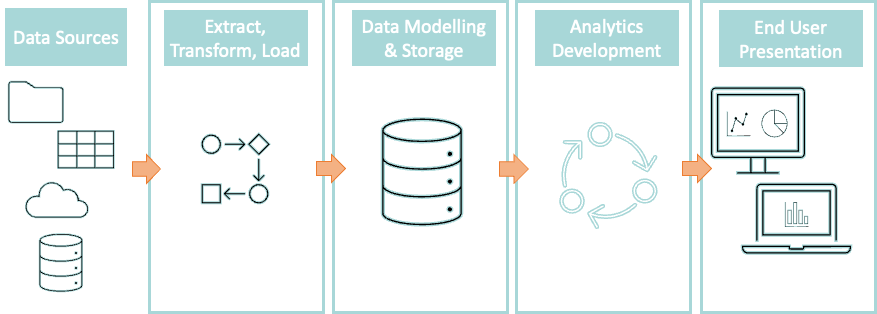

People data can help organizations gain insights into their workforce and business performance, identify opportunities for improvement, and drive strategic decisions. However, people data is often scattered across different systems, formats, and silos, making it difficult to access, integrate, and analyze. To overcome this challenge, organizations need a systematic process to turn their disparate people data sources into a strong foundation on which real-time analytics can be created and distributed to end users.

The process consists of four main steps: extract, transform, load (ETL); data modeling and storage; data analytics development; and end user presentation and adoption. Let’s look at each step in more detail.

Extract, transform, load (ETL)

The first step is to extract relevant people data from the various sources and load it into a centralized location, such as a local staging area or a cloud-based tool. This step may involve connecting to different APIs, databases, files, or web services to access the data. The extracted data may be structured (e.g., tables), semi-structured (e.g., XML files), or unstructured (e.g., images).
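As a rough illustration of the extract stage, the sketch below reads one structured export (CSV) and one semi-structured export (XML) into a single in-memory staging area. The sample data, the source names `hris` and `lms`, and the helper functions are all hypothetical; a real pipeline would read from files, databases, or APIs instead of inline strings.

```python
import csv
import io
import xml.etree.ElementTree as ET

# Hypothetical exports; in practice these would come from files or API calls.
csv_export = "employee_id,department\nE001,Sales\nE002,Engineering\n"
xml_export = """<courses>
  <completion employee_id="E001" course="Onboarding"/>
  <completion employee_id="E002" course="Security Basics"/>
</courses>"""


def extract_csv(text):
    """Parse a structured CSV export into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def extract_xml(text):
    """Parse a semi-structured XML export into a list of dictionaries."""
    root = ET.fromstring(text)
    return [dict(node.attrib) for node in root.iter("completion")]


# The staging area keeps each source separate until the transform stage.
staging = {
    "hris": extract_csv(csv_export),
    "lms": extract_xml(xml_export),
}

for source, rows in staging.items():
    print(source, rows)
```

Keeping each source under its own key preserves the raw extracts, so the transform logic can be rerun without extracting again.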

Once the data has been extracted, the next task within ETL is to transform it so that it is consistent, accurate, and ready for analysis. This may involve cleaning, filtering, aggregating, joining, reshaping, or enriching the data. For example, you may want to remove duplicates, handle outliers or missing values, standardize formats and units, merge data from different sources, or add new variables or calculations.
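The transformations described above can be sketched in a few lines of plain Python. This is a minimal example under stated assumptions: the records, field names, and the two date formats are invented for illustration, and dropping rows with a missing hire date is only one possible policy.

```python
from datetime import datetime

# Hypothetical extracts from two source systems (illustrative data only).
hris_rows = [
    {"employee_id": "E001", "name": "Ana Diaz", "hire_date": "2021-03-15", "department": "sales"},
    {"employee_id": "E001", "name": "Ana Diaz", "hire_date": "2021-03-15", "department": "sales"},
    {"employee_id": "E002", "name": "Ben Okoro", "hire_date": "15/07/2019", "department": "Engineering "},
    {"employee_id": "E003", "name": "Chloe Lim", "hire_date": "", "department": "HR"},
]
lms_rows = [
    {"employee_id": "E001", "courses_completed": 4},
    {"employee_id": "E002", "courses_completed": 7},
]

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")  # formats assumed to occur in the sources


def parse_date(value):
    """Return an ISO date string, or None if the value is empty or unrecognized."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def transform(hris, lms):
    """Deduplicate, drop incomplete rows, standardize formats, and merge LMS data."""
    courses = {row["employee_id"]: row["courses_completed"] for row in lms}
    seen, result = set(), []
    for row in hris:
        emp_id = row["employee_id"]
        if emp_id in seen:  # remove duplicates
            continue
        seen.add(emp_id)
        hire_date = parse_date(row["hire_date"])
        if hire_date is None:  # drop rows with a missing hire date
            continue
        result.append({
            "employee_id": emp_id,
            "name": row["name"],
            "hire_date": hire_date,  # standardized to ISO format
            "department": row["department"].strip().title(),
            "courses_completed": courses.get(emp_id, 0),  # merged from the LMS
        })
    return result


clean_rows = transform(hris_rows, lms_rows)
for row in clean_rows:
    print(row)
```

Whether to drop, flag, or impute incomplete rows depends on the analysis; the sketch drops them only to keep the example short.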

Various tools and platforms can help you perform ETL tasks, such as Microsoft Power BI, Informatica PowerCenter, Apache Airflow, IBM InfoSphere DataStage, Talend Open Studio, Alteryx, and many others. These tools can also automate the ETL process and schedule regular data updates.

Data modeling and storage

The second step is to design and implement a data model that defines how the transformed data will be organized, stored, and accessed in a data warehouse or a data lake. A data warehouse is a repository of structured and processed data that has been optimized for analytical queries. A data lake is a repository of raw or semi-processed data that can accommodate various types of data and support different kinds of analysis.

The choice between a data warehouse and a data lake depends on several factors, such as the volume, variety, velocity, and veracity of the data; the analytical needs and use cases; the available resources and skills; and the security and governance requirements. In some cases, organizations may use both a data warehouse and a data lake to leverage their respective strengths.

A common approach to data modeling is to use a multidimensional or star schema, which consists of a central fact table that contains the measures or metrics of interest (e.g., performance rating) and several dimension tables that contain the attributes or categories that describe the facts (e.g., demographics, department). This schema allows for fast and flexible analysis across multiple dimensions.
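To make the star schema concrete, here is a small sketch using Python's built-in `sqlite3` module with an in-memory database. The table and column names (`fact_performance`, `dim_employee`, `dim_department`) and the sample rows are assumptions chosen for illustration, not a prescribed design.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Two dimension tables describe the facts; one fact table holds the measure.
conn.executescript("""
CREATE TABLE dim_employee (
    employee_key INTEGER PRIMARY KEY,
    gender TEXT,
    age_band TEXT
);
CREATE TABLE dim_department (
    department_key INTEGER PRIMARY KEY,
    department_name TEXT
);
CREATE TABLE fact_performance (
    employee_key INTEGER REFERENCES dim_employee(employee_key),
    department_key INTEGER REFERENCES dim_department(department_key),
    review_year INTEGER,
    performance_rating REAL
);
""")

conn.executemany(
    "INSERT INTO dim_employee VALUES (?, ?, ?)",
    [(1, "F", "25-34"), (2, "M", "35-44"), (3, "F", "35-44")],
)
conn.executemany(
    "INSERT INTO dim_department VALUES (?, ?)",
    [(10, "Sales"), (20, "Engineering")],
)
conn.executemany(
    "INSERT INTO fact_performance VALUES (?, ?, ?, ?)",
    [(1, 10, 2023, 4.0), (2, 20, 2023, 3.0), (3, 20, 2023, 5.0)],
)

# Slice the measure by one dimension: average rating per department.
avg_by_department = conn.execute("""
    SELECT d.department_name, AVG(f.performance_rating) AS avg_rating
    FROM fact_performance AS f
    JOIN dim_department AS d ON d.department_key = f.department_key
    GROUP BY d.department_name
    ORDER BY d.department_name
""").fetchall()
print(avg_by_department)
```

Analyzing by a different dimension, such as age band, only requires joining `dim_employee` instead; the fact table stays unchanged.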

Another important aspect of data modeling is master data management (MDM), which is the process of ensuring that the key entities or concepts in the organization (e.g., employee, customer) are defined consistently and accurately across different systems and sources. MDM helps avoid inconsistencies, redundancies, or conflicts in the data.
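One small piece of MDM, matching the same employee across systems that format identifiers differently, can be sketched as follows. The normalization rule, the sample records, and the "earlier source wins" priority are simplifying assumptions; production MDM tools apply much richer matching and survivorship rules.

```python
def normalize_id(raw_id):
    """Normalize an identifier: uppercase, keep only letters and digits."""
    return "".join(ch for ch in raw_id.upper() if ch.isalnum())


# Hypothetical records for the same person held in two systems.
hris = [{"id": "E-001", "name": "Ana Diaz", "email": "ana.diaz@example.com"}]
crm = [{"id": "e001 ", "name": "Diaz, Ana", "email": None}]


def build_master(sources):
    """Merge records that share a normalized ID; earlier sources take priority."""
    master = {}
    for records in sources:
        for record in records:
            key = normalize_id(record["id"])
            golden = master.setdefault(key, {"id": key})
            for field, value in record.items():
                # Keep the first non-empty value seen for each field.
                if field != "id" and value is not None and field not in golden:
                    golden[field] = value
    return master


master_records = build_master([hris, crm])
print(master_records)
```

Listing the HRIS first makes it the preferred source for name and email, which is one common way to resolve conflicts.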

There are various tools and platforms that can help you build and manage your data warehouse or data lake, such as Microsoft Azure Synapse Analytics, Amazon Redshift, Google BigQuery, Snowflake, or IBM Db2 Warehouse. These tools can help you scale your storage capacity and performance according to your needs.

Data analytics development

The third step is to develop the analytical models and methods that will generate insights from the stored data. This step may involve applying various techniques, such as descriptive analytics (e.g., summary statistics), diagnostic analytics (e.g., root cause analysis), predictive analytics (e.g., forecasting), prescriptive analytics (e.g., optimization), or exploratory analytics (e.g., looking for unexpected patterns).
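Descriptive analytics is the simplest of these techniques to illustrate. The sketch below computes summary statistics of performance ratings per department using only the standard library; the ratings are made-up sample values.

```python
from collections import defaultdict
from statistics import mean, median

# Hypothetical (department, performance rating) pairs.
ratings = [
    ("Sales", 3.0),
    ("Sales", 4.0),
    ("Engineering", 3.0),
    ("Engineering", 4.0),
    ("Engineering", 5.0),
]

# Group ratings by department.
by_department = defaultdict(list)
for department, rating in ratings:
    by_department[department].append(rating)

# Compute count, mean, and median for each group.
summary = {
    department: {
        "count": len(values),
        "mean": mean(values),
        "median": median(values),
    }
    for department, values in by_department.items()
}

for department, stats in summary.items():
    print(department, stats)
```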

This step may also involve using advanced methods, such as machine learning (ML) or artificial intelligence (AI), to discover patterns, trends, anomalies, or relationships in the data that are not obvious or intuitive. For example, you may want to use ML or AI to segment your employees based on their working format and location preferences; classify your employees based on their performance or engagement; or recommend development resources and plans based on their needs or interests.
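As an illustration of segmentation, the following sketch applies a plain k-means clustering to hypothetical employee preferences. The two features (preferred remote days per week and commute time in hours), the sample points, and the fixed starting centroids are assumptions made for the example; in practice you would likely scale the features and use an established ML library.

```python
import math


def kmeans(points, centroids, iterations=10):
    """Plain k-means: assign points to the nearest centroid, then update centroids."""
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for point in points:
            nearest = min(
                range(len(centroids)),
                key=lambda i: math.dist(point, centroids[i]),
            )
            clusters[nearest].append(point)
        # Move each centroid to the mean of its cluster (keep it if the cluster is empty).
        centroids = [
            tuple(sum(axis) / len(cluster) for axis in zip(*cluster)) if cluster else centroid
            for cluster, centroid in zip(clusters, centroids)
        ]
    return centroids, clusters


# Hypothetical employees: (preferred remote days per week, commute time in hours).
employees = [(0, 0.3), (1, 0.5), (1, 0.4), (4, 1.5), (5, 1.2), (4, 1.0)]

# Fixed starting centroids keep the example deterministic.
centroids, clusters = kmeans(employees, centroids=[employees[0], employees[3]])

for centroid, cluster in zip(centroids, clusters):
    print("centroid:", centroid, "members:", cluster)
```

With this sample, the two segments roughly correspond to office-leaning employees with short commutes and remote-leaning employees with long commutes.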

There are various tools and platforms that can help you develop and deploy your data analytics models and methods, such as Microsoft Azure Machine Learning, Amazon SageMaker, Google Cloud AI Platform, IBM Watson Studio, or SAS Visual Analytics. These tools can help you automate the data analytics process and integrate it with your data warehouse or data lake.

End user presentation and adoption

The final step is to present and communicate the insights from the data analytics to the end users in a clear, concise, and compelling way. This step may involve creating dashboards, reports, or visualizations that display the key findings, metrics, or indicators in an interactive and intuitive manner. The goal is to enable the end users to explore, understand, and act on the insights. With predictive or prescriptive analytics, it may be beneficial to integrate the analytics outputs directly into the relevant systems or applications via an API, so that the results reach users inside the tools they already work in.
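For the API integration case, the analytics output usually has to be serialized into a format the receiving system can consume. The sketch below builds a JSON payload from hypothetical model scores; the attrition-risk use case, the model name `attrition_risk_v1`, the threshold, and the payload shape are all illustrative assumptions, and the actual sending of the payload is left out because it depends on the target system.

```python
import json


def build_payload(risk_scores, threshold=0.7):
    """Serialize employees whose score meets the threshold into a JSON string."""
    flagged = [
        {"employee_id": emp_id, "attrition_risk": round(score, 2)}
        for emp_id, score in sorted(risk_scores.items())
        if score >= threshold
    ]
    return json.dumps({
        "model": "attrition_risk_v1",  # hypothetical model identifier
        "threshold": threshold,
        "flagged": flagged,
    })


# Hypothetical scores produced by a predictive model.
payload = build_payload({"E001": 0.82, "E002": 0.35, "E003": 0.71})
print(payload)
```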

This step may also involve providing training, guidance, or support to the end users to help them adopt and use the data analytics effectively. This may include explaining the data sources, definitions, assumptions, limitations, or interpretations of the data analytics; demonstrating the features, functions, or benefits of the dashboards, reports, or visualizations; or soliciting feedback, suggestions, or questions from the end users.

There are various tools and platforms that can help you create and share your data analytics dashboards, reports, or visualizations, such as Microsoft Power BI, Tableau, Qlik Sense, Looker, Klipfolio, or Zoho Analytics. These tools can help you customize your data presentation and distribution according to your audience and purpose.

Conclusion

Turning people data into actionable insights is a complex but rewarding process that can help organizations improve their workforce and business performance. By following the four steps of ETL, data modeling and storage, data analytics development, and end user presentation and adoption, you can build a strong foundation for your data analytics and deliver value to your stakeholders.